
In transparency we trust.

"A group of humans behind a transparent glass surrounded by police robots" by Salvador Dali (DALL-E)

Be honest about your mistakes. Be open about your successes. Be transparent enough to share both.

Transparency in AI systems

“Trust, honesty, humility, transparency and accountability are the building blocks of a positive reputation.” This quote is attributed to Mike Paul who, according to Wikipedia, was a left-handed MLB pitcher who played from 1968 to 1974 for the Cleveland Indians, Texas Rangers and Chicago Cubs. Do AI algorithms need a reputation?

Transparency in AI systems refers to the ability of stakeholders to understand how a system works, including how it makes decisions and reaches conclusions. This matters because it gives stakeholders grounds to trust the system and makes it possible to check that the system is used ethically and in line with its intended goals. A transparent AI system also supports accountability and can help reveal bias or errors in its algorithms. In general, transparency is considered a key aspect of responsible and trustworthy AI.

Transparency in medical diagnostic systems

Transparency is particularly important in intelligent medical diagnostic systems. First, accurate and reliable diagnoses are crucial for patient health and well-being, and if a diagnostic system is not transparent, doctors and patients may find it hard to trust its recommendations. Second, transparency can help identify bias or errors in the system's algorithms, which matters especially in medicine, where even small mistakes can have serious consequences. Finally, transparency is important for ethical reasons: decisions informed by AI systems can have life-or-death implications, so they must be made in a transparent and accountable manner. By relying on transparent systems, doctors and other medical professionals can build trust with their patients and colleagues.

Having established the value of transparency for the credibility of AI systems, how could it be realized? Here are four specific ways in which a system could be transparent:

- Explainability: This refers to the use of AI techniques that can provide interpretable and human-understandable explanations for their decisions. For example, an AI system that uses machine learning to diagnose a medical condition could provide a list of the most important symptoms or factors that it considered in making its recommendation.

- Auditability: This refers to the ability to trace the steps that an AI system took in reaching a conclusion, allowing stakeholders to verify its accuracy and determine whether any errors or biases occurred. For example, an AI diagnostic system could provide a detailed log of the data it used, the algorithms it applied, and the reasoning behind its recommendation.

- User control: This refers to the ability of users, such as doctors or patients, to control and customize the behaviour of the AI system. For example, an AI diagnostic system could allow users to adjust the relative importance of different factors or to provide additional information that the system might not have considered.

- Openness: This refers to the availability of information about the AI system and how it works. For example, an AI diagnostic system could provide detailed documentation about its algorithms, data sources, and performance metrics, allowing stakeholders to understand its strengths and limitations.
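To make the first three ideas concrete, here is a minimal Python sketch, assuming a toy linear (logistic) scoring model rather than a real diagnostic network. The factor names, weights, and bias are hypothetical and not clinically derived, and `diagnose` is an illustrative helper, not part of any library. It returns the factors that contributed most to the score (explainability), appends a JSON record of inputs, weights, and result to a log (auditability), and accepts user overrides of factor weights (user control).

```python
import json
import math
from datetime import datetime, timezone

# Hypothetical factors and weights for a toy logistic risk score.
# Illustrative only: not clinically derived.
DEFAULT_WEIGHTS = {
    "fever": 1.2,
    "cough": 0.8,
    "oxygen_saturation_drop": 2.0,
    "age_over_65": 0.6,
}
BIAS = -2.5


def diagnose(patient, weights=None, top_k=2, audit_log=None):
    """Return (probability, top_k most influential factors) for one patient.

    patient:   dict of factor name -> value (1 = present, 0 = absent).
    weights:   optional user overrides of factor importance (user control).
    audit_log: optional list; one JSON record is appended per call (auditability).
    """
    w = dict(DEFAULT_WEIGHTS)  # copy so overrides never leak between calls
    if weights:
        w.update(weights)
    # Each factor's additive contribution to the logit (explainability).
    contributions = {f: w[f] * patient.get(f, 0.0) for f in w}
    logit = BIAS + sum(contributions.values())
    prob = 1.0 / (1.0 + math.exp(-logit))
    # Rank factors by the magnitude of their contribution.
    top = sorted(contributions.items(), key=lambda kv: abs(kv[1]),
                 reverse=True)[:top_k]
    if audit_log is not None:
        audit_log.append(json.dumps({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "inputs": patient,
            "weights": w,
            "contributions": contributions,
            "probability": round(prob, 4),
        }, sort_keys=True))
    return prob, top


if __name__ == "__main__":
    log = []
    case = {"fever": 1, "cough": 1, "oxygen_saturation_drop": 1}
    p, top = diagnose(case, audit_log=log)
    print(f"default weights: p={p:.2f}, top factors={top}")
    # A clinician down-weights one factor; the explanation changes accordingly.
    p2, top2 = diagnose(case, weights={"oxygen_saturation_drop": 0.5}, audit_log=log)
    print(f"adjusted weights: p={p2:.2f}, top factors={top2}")
    print(len(log), "audit records")
```

With the default weights this case scores about 0.82, with `oxygen_saturation_drop` and `fever` as the top factors; lowering the oxygen weight to 0.5 brings the score to 0.50 and the top factors become `fever` and `cough`. A linear model is used here only because weight times value decomposes its logit exactly; deep networks need approximate attribution methods, such as the heat maps discussed below.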

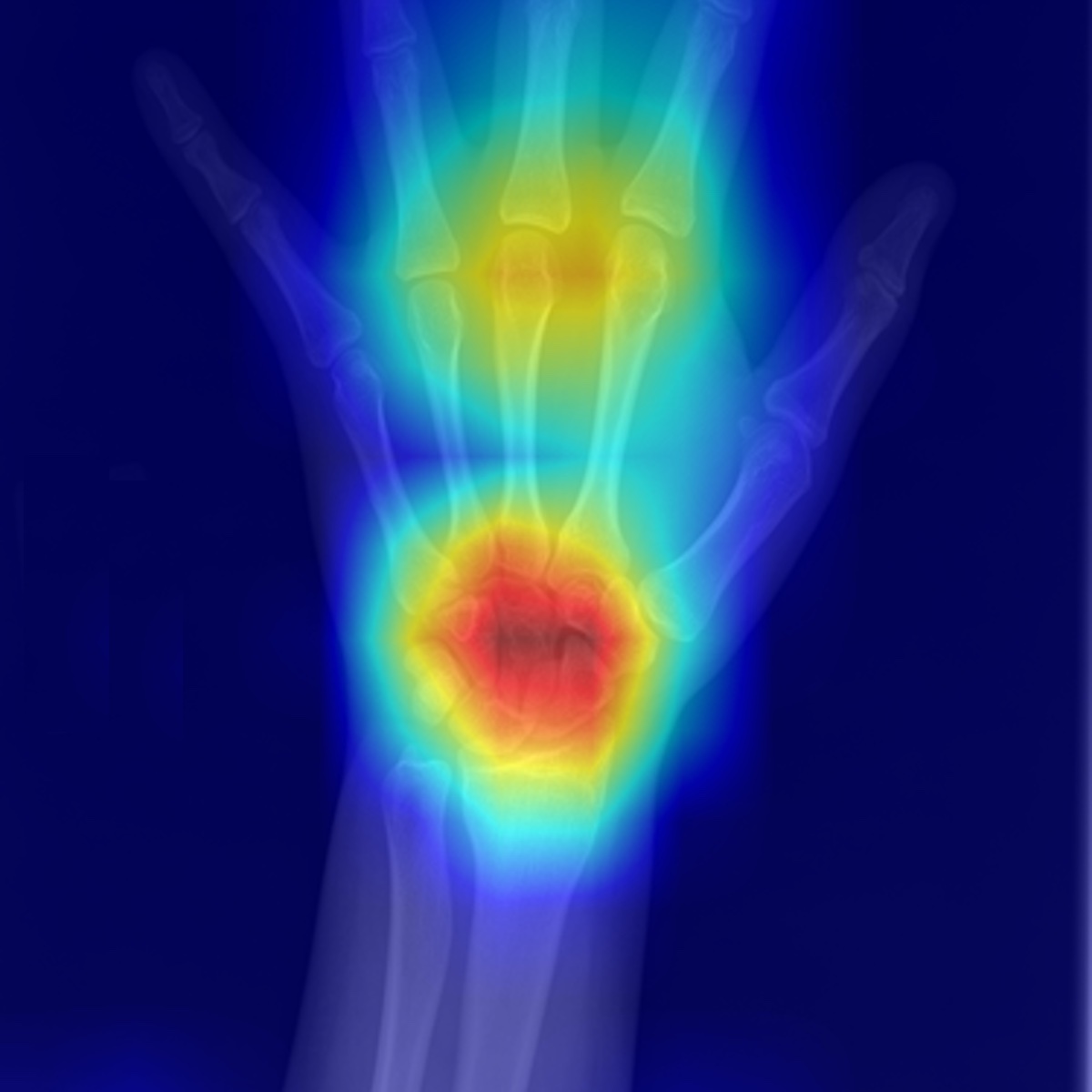

Explain: Heat map

In this application we provide an explainability mechanism generically known as a heat map. A heat map is a visual representation of the input data (e.g., an x-ray image) in which each value represents the relative importance of the corresponding input (a pixel, region, or area of the x-ray image) to the network's decision. This helps explain how the network interprets, processes, and uses input information to make predictions. A heat map might show that certain inputs strongly influence the model's output while others have only a weak influence, which helps identify the inputs the network relies on most and those it largely ignores. Overall, heat maps provide a useful visual representation of how a deep learning model functions.

"Heat map highlighting regions of interest" (AZYRI.com)

Heat maps can give an expert clinical eye confidence in a decision (trust), help explain a decision to a non-specialist professional (explainability), reveal symptoms that an expert human eye might miss (increased intelligence), or simply expose deficiencies or biases of the intelligent system (acknowledgment of system limitations). Heat maps breed transparency.
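Several techniques can produce such a heat map, including gradient-based saliency, Grad-CAM, and occlusion sensitivity; which one underlies the figure above is not stated. As a minimal sketch of the general idea, the Python below implements occlusion sensitivity: it hides one small patch of the image at a time and records how much the model's score drops, so regions whose removal hurts the score most come out "hottest". The 4x4 "x-ray" and `toy_model` are hypothetical stand-ins for a real image and a trained network.

```python
from typing import Callable, List

Image = List[List[float]]  # row-major grid of pixel intensities


def occlusion_heat_map(image: Image, model: Callable[[Image], float],
                       patch: int = 2, fill: float = 0.0) -> Image:
    """Return a heat map in [0, 1] with the same shape as `image`.

    Each patch x patch window (stride 1) is replaced by `fill`; the drop in
    the model's score is credited to every pixel the window covered.
    """
    h, w = len(image), len(image[0])
    baseline = model(image)
    total = [[0.0] * w for _ in range(h)]
    count = [[0] * w for _ in range(h)]
    for top in range(h - patch + 1):
        for left in range(w - patch + 1):
            occluded = [row[:] for row in image]  # copy, keep original intact
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    occluded[r][c] = fill
            drop = baseline - model(occluded)  # large drop = important region
            for r in range(top, top + patch):
                for c in range(left, left + patch):
                    total[r][c] += drop
                    count[r][c] += 1
    # Average over overlapping windows (0 for pixels never covered).
    heat = [[total[r][c] / count[r][c] if count[r][c] else 0.0
             for c in range(w)] for r in range(h)]
    # Clamp negative drops to 0 and scale so the hottest pixel is 1.0.
    peak = max(max(row) for row in heat)
    return [[max(v, 0.0) / peak if peak > 0 else 0.0 for v in row]
            for row in heat]


def toy_model(img: Image) -> float:
    # Hypothetical stand-in for a trained network: its score is the mean
    # intensity of the upper-right 2x2 corner of a 4x4 image.
    return sum(img[r][c] for r in range(2) for c in range(2, 4)) / 4.0


if __name__ == "__main__":
    xray = [[1.0] * 4 for _ in range(4)]  # hypothetical uniform 4x4 "x-ray"
    for row in occlusion_heat_map(xray, toy_model, patch=2):
        print(" ".join(f"{v:.2f}" for v in row))
```

The hottest pixel lands in the upper-right corner the toy model actually uses, while some heat bleeds into neighbouring pixels because each patch covers more than one pixel. Occlusion only needs black-box access to the model, at the cost of one forward pass per patch position; gradient-based methods such as Grad-CAM are cheaper to compute but require access to the network's internals.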

There is still a long way to go to endow AI systems with the superpower of transparency. Help is on the way.